Category: Articles

Author: Martín Jurado

Chief Data and Analytics Officer at Zurich Insurance

October 31, 2023

10-minute read.

In today’s digital age, the business world is experiencing a revolution driven by applications that operate in real time and respond to specific events. These applications have become a vital element in a wide range of industries, transforming how companies interact with data and make decisions. That is why companies across many sectors are adopting tools that help them meet their own needs and respond to the demands of their users, employees, and markets.

From financial transactions that occur in milliseconds to instant personalization in online commerce, real-time monitoring of patient health, or optimization of transportation routes, real-time and event-based applications are driving efficiency, customer satisfaction, and competitiveness in the markets. This is just a look at how these applications are reshaping the way businesses operate and provide services.

Apache Flink and similar tools are a cornerstone of this technological revolution, because they allow data streams to be processed efficiently in real time. These technologies are at the forefront of business transformation, giving organizations the ability to make decisions based on up-to-date data and to act quickly on constantly evolving events. The future of business is driven by real-time data!

But what are real-time applications or event-driven applications, what are their characteristics and how can we take advantage of them?

Event-Driven Applications

Event-driven applications are those that are designed to respond to specific events or happenings rather than following a linear execution flow. These events can be triggered by user interaction, changes in the environment, or data generated by other systems. In this approach, applications react quickly and efficiently to events, making them ideal for situations where speed and scalability are critical. These applications are used in a wide variety of cases, from real-time data processing to messaging and automation systems.

- Focus on Event Handling: Event-driven applications are primarily designed to respond to specific events or occurrences. These events can be user interactions, changes in the environment, or data generated by other systems.

- Asynchronous Processing: These applications are inherently asynchronous. They don’t follow a linear execution flow but instead wait for events to trigger their actions. When an event occurs, the application reacts promptly.

- Stateless or Stateful: They can be either stateless or stateful. In a stateless event-driven system, each event is processed independently, without knowledge of past events. A stateful system maintains context and can remember previous events.

- Flexibility: Event-driven applications are highly adaptable and can handle a wide range of use cases. They excel in scenarios where responses need to be quick and varied based on different events.

- Examples: Web applications that respond to user clicks, IoT systems that react to sensor data, chatbots that reply to user messages, and email notification systems are examples of event-driven applications.
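The characteristics above can be sketched in a few lines of plain Java. This is only an illustration, not a production design: the EventBus and ClickCounter classes and the "click" event type are hypothetical names, and dispatch is synchronous for brevity, whereas a real system would usually deliver events asynchronously through a queue or broker.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

/** Minimal event bus: handlers are registered per event type and run when an event arrives. */
final class EventBus {
    private final Map<String, List<Consumer<String>>> handlers = new HashMap<>();

    /** Registers a handler for one event type. */
    void subscribe(String eventType, Consumer<String> handler) {
        handlers.computeIfAbsent(eventType, k -> new ArrayList<>()).add(handler);
    }

    /** Delivers the payload to every handler registered for the event type. */
    void publish(String eventType, String payload) {
        for (Consumer<String> handler : handlers.getOrDefault(eventType, List.of())) {
            handler.accept(payload);
        }
    }
}

/** Stateful handler: remembers how many events it has seen per user. */
final class ClickCounter implements Consumer<String> {
    private final Map<String, Integer> clicksPerUser = new HashMap<>();

    @Override
    public void accept(String userId) {
        clicksPerUser.merge(userId, 1, Integer::sum);
    }

    int clicksFor(String userId) {
        return clicksPerUser.getOrDefault(userId, 0);
    }
}

final class EventDrivenDemo {
    public static void main(String[] args) {
        EventBus bus = new EventBus();
        ClickCounter counter = new ClickCounter();

        // Stateless handler: each event is handled on its own.
        bus.subscribe("click", userId -> System.out.println("click from " + userId));
        // Stateful handler: keeps context across events.
        bus.subscribe("click", counter);

        bus.publish("click", "alice");
        bus.publish("click", "bob");
        bus.publish("click", "alice");

        System.out.println("alice clicked " + counter.clicksFor("alice") + " times");
    }
}
```

Nothing runs until an event is published, which is the key difference from a linear execution flow.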

Real-Time Applications

Real-time applications are those that process and deliver information virtually instantly, with minimal latency. These applications are critical in environments where decision-making and quick action are essential, such as online commerce, fraud detection, systems monitoring, and more. They can involve real-time visualization of data, continuous processing of data streams, and making instant decisions based on real-time events.

- Continuous Data Processing: Real-time applications are focused on processing and delivering information with minimal latency. They deal with streams of data that arrive continuously and need to be processed as soon as possible.

- Latency Consideration: They prioritize low latency. These systems aim to minimize the delay between the occurrence of an event or data point and the application’s response. This is crucial for applications like stock trading or live video streaming.

- Often Stateless: These applications are often stateless. They process data as it arrives without keeping a long-term memory of past data. Event-driven applications, by contrast, may retain state for a period.

- Examples: Stock market tickers, online multiplayer games, traffic monitoring systems, and live sports scoreboards are examples of real-time applications.
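Since low latency is the defining concern here, it helps to see how the delay between an event and the response to it might be measured. The following is a minimal sketch in plain Java. LatencyTracker is a hypothetical helper, and it assumes every event carries the timestamp (in milliseconds) at which it occurred.

```java
import java.util.function.LongSupplier;

/** Tracks the delay between when an event occurred and when it was handled. */
final class LatencyTracker {
    private final LongSupplier clockMillis; // injected so the clock can be replaced in tests
    private long eventCount = 0;
    private long totalLatencyMillis = 0;
    private long maxLatencyMillis = 0;

    LatencyTracker(LongSupplier clockMillis) {
        this.clockMillis = clockMillis;
    }

    /** Records one handled event and returns its latency in milliseconds. */
    long record(long eventTimestampMillis) {
        long latency = clockMillis.getAsLong() - eventTimestampMillis;
        eventCount++;
        totalLatencyMillis += latency;
        maxLatencyMillis = Math.max(maxLatencyMillis, latency);
        return latency;
    }

    double averageLatencyMillis() {
        return eventCount == 0 ? 0.0 : (double) totalLatencyMillis / eventCount;
    }

    long maxLatencyMillis() {
        return maxLatencyMillis;
    }
}

final class LatencyDemo {
    public static void main(String[] args) {
        LatencyTracker tracker = new LatencyTracker(System::currentTimeMillis);
        long occurredAt = System.currentTimeMillis(); // hypothetical event timestamp
        // ... the application would handle the event here ...
        long latency = tracker.record(occurredAt);
        System.out.println("latency ms: " + latency);
    }
}
```

Tracking the maximum as well as the average matters in practice, because a system can look fast on average and still miss its target on occasional slow events.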

But what is Apache Flink?

Stateful Computations over Data Streams

Apache Flink is a data processing engine that handles both real-time streams and batch workloads. Some of the reasons why many enterprise architectures are considering it include:

- Real-Time Processing: Flink enables real-time processing of data streams, meaning businesses can make real-time decisions based on current events. This is crucial in industries such as finance, online advertising, and healthcare.

- Scalability: Flink is highly scalable and can handle large volumes of data, which is essential in an increasingly data-driven business world.

- Fault Tolerance: Flink periodically checkpoints application state to durable storage, so applications can recover and continue to function after failures.

- Connectivity to Various Data Sources: It can connect to a variety of data sources, making it suitable for applications that require integration with multiple systems.

- Flexibility: Flink is a versatile tool that can adapt to a wide range of use cases, from data processing to advanced analytics.

Processing Data Streams — Unbounded and Bounded with Apache Flink

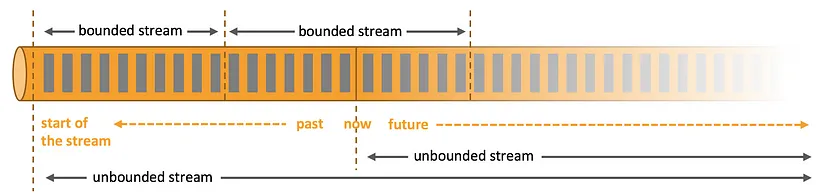

First and foremost, it’s crucial to understand that virtually all data is generated as a continuous stream of events, known as a “data stream.” Before looking at how Apache Flink handles such streams, it helps to clarify the difference between unbounded and bounded streams.

A data stream can be processed in two fundamental ways, as an unbounded stream or as a bounded stream:

- Unbounded Streams: In the case of unbounded streams, there’s a clear starting point, but there’s no defined endpoint. These streams persist indefinitely, continuously providing data as it’s generated. It’s essential to process events quickly as they’re ingested since there’s no waiting for all data to arrive — the flow is never-ending. Typically, processing unbounded data requires maintaining the order of events, meaning processing them in the order they occurred, to ensure result integrity.

- Bounded Streams: In contrast, bounded streams have both a well-defined starting point and an endpoint. This implies that you can ingest and collect all the data before performing any calculations. There’s no strict need to maintain a specific order when ingesting data in bounded streams, as the dataset is finite and can always be sorted. This approach to processing is commonly known as “batch processing.”
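The contrast between the two modes can be sketched in plain Java, without any Flink APIs. The class and method names below are hypothetical. The bounded case computes a median, which needs the complete, sorted dataset. The unbounded case keeps a running average that is updated after each event, because there is never a point at which "all the data" has arrived.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

final class BoundedVsUnbounded {

    /** Bounded input: the whole dataset is available, so it can be sorted before computing. */
    static double median(List<Integer> allValues) {
        if (allValues.isEmpty()) {
            throw new IllegalArgumentException("median of an empty dataset is undefined");
        }
        List<Integer> sorted = new ArrayList<>(allValues);
        Collections.sort(sorted);
        int n = sorted.size();
        return n % 2 == 1
                ? sorted.get(n / 2)
                : (sorted.get(n / 2 - 1) + sorted.get(n / 2)) / 2.0;
    }

    /** Unbounded input: there is no end, so the result is updated after every event. */
    static final class RunningAverage {
        private long count = 0;
        private double sum = 0.0;

        double onEvent(int value) {
            count++;
            sum += value;
            return sum / count;
        }
    }

    public static void main(String[] args) {
        System.out.println("median = " + median(List.of(5, 1, 3)));

        RunningAverage average = new RunningAverage();
        for (int reading : new int[] {2, 4, 9}) {
            System.out.println("running average = " + average.onEvent(reading));
        }
    }
}
```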

Apache Flink excels in processing both types of data streams (unbounded and bounded):

- Unbounded Streams: Apache Flink provides precise control over time and state, enabling its runtime to run any kind of application on unbounded streams. This means it’s highly proficient in real-time data processing, where speed and continuity are essential.

- Bounded Streams: For bounded streams, Apache Flink employs algorithms and data structures specifically designed for fixed-sized datasets, resulting in excellent performance.

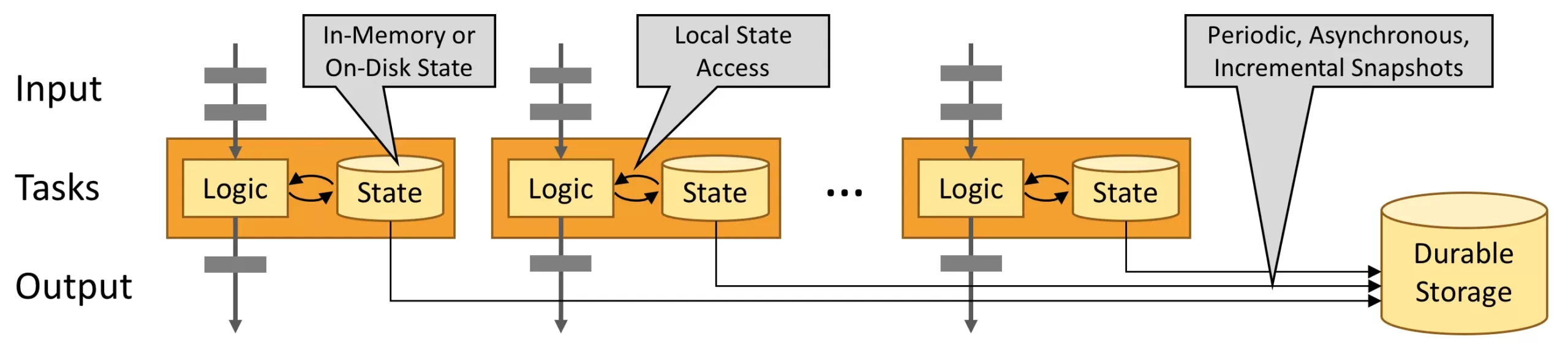

Beyond the distinction between bounded and unbounded streams, it’s crucial to understand that Flink applications can be designed to maintain state, meaning they can store and access information during their execution. This state can be used for various purposes, such as aggregating data or remembering past events.

Leverage In-Memory Performance

Stateful Flink applications are optimized for local state access: When we say “stateful Flink applications,” we’re referring to applications that make use of this stored state. These applications are designed to be highly efficient when accessing this state information.

Task state is always maintained in memory or, if the state size exceeds the available memory, in access-efficient on-disk data structures: In Flink, the state can be stored either in memory (RAM) or, if the size of the state data is too large to fit in memory, it can be stored in on-disk data structures. This flexibility allows Flink to handle large state data efficiently, even when it can’t all be stored in memory.

Hence, tasks perform all computations by accessing local, often in-memory, state, yielding very low processing latencies: The tasks within a Flink application perform their computations by accessing this state information. When the state is in memory (which is often the case for optimal performance), it results in very low processing latencies. This means that tasks can quickly access and update the state data, which is crucial for real-time or low-latency processing.

Flink guarantees exactly-once state consistency in case of failures by periodically and asynchronously checkpointing the local state to durable storage: Even in scenarios where failures occur, Flink ensures the consistency of the stored state. It achieves this by periodically creating checkpoints of the state data and saving them to durable storage. This means that, in case of a system failure, Flink can recover the state to a consistent point in time, allowing the application to continue processing without data loss or duplication.
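To make the idea of checkpoint and recovery concrete, here is a deliberately simplified sketch in plain Java. It is not how Flink implements checkpointing: the snapshot is taken synchronously, "durable storage" is just a second in-memory map, and the input is assumed to be replayable from a saved offset. All names are hypothetical. What it does show is why saving the state together with the input position lets a task recover without counting any record twice.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Word-counting task whose local state can be checkpointed and restored. */
final class CheckpointedWordCounter {
    private Map<String, Long> counts = new HashMap<>(); // local, in-memory state
    private int nextOffset = 0;                         // input position to read next

    // Stand-in for durable storage: the last completed checkpoint.
    private Map<String, Long> savedCounts = new HashMap<>();
    private int savedOffset = 0;

    void process(String word) {
        counts.merge(word, 1L, Long::sum);
        nextOffset++;
    }

    /** Saves a consistent copy of the state together with the input position. */
    void checkpoint() {
        savedCounts = new HashMap<>(counts);
        savedOffset = nextOffset;
    }

    /** Rolls back to the last checkpoint and returns the offset to resume from. */
    int restore() {
        counts = new HashMap<>(savedCounts);
        nextOffset = savedOffset;
        return nextOffset;
    }

    Map<String, Long> currentCounts() {
        return new HashMap<>(counts);
    }

    /**
     * Processes the input, simulating one crash just before offset failAt.
     * checkpointEvery must be greater than zero; a negative failAt means no crash.
     */
    static Map<String, Long> runWithOneFailure(List<String> input, int checkpointEvery, int failAt) {
        CheckpointedWordCounter task = new CheckpointedWordCounter();
        boolean alreadyFailed = false;
        int offset = 0;
        while (offset < input.size()) {
            if (!alreadyFailed && offset == failAt) {
                alreadyFailed = true;
                offset = task.restore(); // rewind the replayable input
                continue;
            }
            task.process(input.get(offset));
            offset++;
            if (offset % checkpointEvery == 0) {
                task.checkpoint();
            }
        }
        return task.currentCounts();
    }
}
```

With the input a, b, a, c, a, a checkpoint every two records, and a crash before the fourth record, the task rolls back to the checkpoint taken after two records and replays the third. The final counts are the same as in a run without any failure.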

Hello, World! with Apache Flink

Creating your first Apache Flink application is an exciting step into the world of stream processing. To keep things simple, let’s build a basic “Hello, World!” Flink application that processes a stream of words and counts the occurrences of each word. We’ll use Java for this example.

Step 1: Set Up Your Development Environment

Before you start coding, make sure you have Apache Flink installed, and your development environment is ready. You can download Apache Flink from the official website.

https://nightlies.apache.org/flink/flink-docs-stable/docs/try-flink/local_installation/

Step 2: Create a New Maven Project

In your development environment, create a new Maven project and add the Flink dependency. Your pom.xml should include the following dependency:
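A minimal sketch of such a dependency block is shown here. The version number is an assumption (1.18.0 was a current release around the time of this article) and should be replaced with the Flink version you actually installed. The flink-clients artifact is included on the assumption that you want to run the job locally from your IDE or from Maven.

```xml
<properties>
  <!-- Assumed version: adjust to match your Flink installation -->
  <flink.version>1.18.0</flink.version>
</properties>

<dependencies>
  <!-- DataStream API -->
  <dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-streaming-java</artifactId>
    <version>${flink.version}</version>
  </dependency>
  <!-- Needed to execute jobs locally, for example from an IDE -->
  <dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-clients</artifactId>
    <version>${flink.version}</version>
  </dependency>
</dependencies>
```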

Step 3: Write Your Flink Application

Now, create your Flink application. Here’s a simple “Hello, World!” example:
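The following is a sketch of what such a word-count job can look like with the DataStream API. It assumes the Flink streaming and client dependencies are on the classpath. The class name HelloWorldWordCount, the Tokenizer helper, and the sample input are illustrative choices, not part of Flink.

```java
import org.apache.flink.api.common.functions.FlatMapFunction;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.util.Collector;

public class HelloWorldWordCount {

    public static void main(String[] args) throws Exception {
        // Set up the execution environment
        final StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

        // Create a data stream from a list of words
        DataStream<String> text = env.fromElements(
                "Hello World",
                "Hello Flink",
                "Hello real-time data");

        // Split into words, group by word, and keep a running count per word
        DataStream<Tuple2<String, Integer>> counts = text
                .flatMap(new Tokenizer())
                .keyBy(value -> value.f0)
                .sum(1);

        // Print the results to the console
        counts.print();

        // Nothing runs until the job is executed
        env.execute("Hello World Word Count");
    }

    /** Splits each input string into lowercase words and emits (word, 1) pairs. */
    public static final class Tokenizer implements FlatMapFunction<String, Tuple2<String, Integer>> {
        @Override
        public void flatMap(String line, Collector<Tuple2<String, Integer>> out) {
            for (String word : line.toLowerCase().split("\\W+")) {
                if (!word.isEmpty()) {
                    out.collect(new Tuple2<>(word, 1));
                }
            }
        }
    }
}
```

Because this is a streaming job, the count for a word is emitted again each time that word appears, so a repeated word such as "hello" shows up several times in the output with an increasing count.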

The line under the comment “// Create a data stream from a list of words”, which declares a DataStream<String>, is a simple way to illustrate how to create a data stream from a static list of words. In a real-world environment, however, you would typically read data from real-time sources such as Kafka, messaging systems, real-time databases, sensors, web applications, or any other source that generates data continuously. Apache Flink is highly versatile and can connect to a wide range of real-time data sources for processing.

To obtain data from an external source, you usually use connectors or custom sources provided by Flink or developed by yourself. For example, Flink provides ready-to-use connectors for Kafka, Apache Pulsar, AWS Kinesis, and many other popular sources. You can also develop custom connectors to integrate with specific systems.
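As an illustration of the Kafka case, the sketch below reads strings from a Kafka topic instead of a static in-memory list. It assumes the separate flink-connector-kafka dependency has been added to the project. The broker address, topic name, consumer group, and class name are placeholders.

```java
import org.apache.flink.api.common.eventtime.WatermarkStrategy;
import org.apache.flink.api.common.serialization.SimpleStringSchema;
import org.apache.flink.connector.kafka.source.KafkaSource;
import org.apache.flink.connector.kafka.source.enumerator.initializer.OffsetsInitializer;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class KafkaWordsJob {

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

        KafkaSource<String> source = KafkaSource.<String>builder()
                .setBootstrapServers("localhost:9092")             // assumed broker address
                .setTopics("words")                                // hypothetical topic
                .setGroupId("hello-flink")                         // hypothetical consumer group
                .setStartingOffsets(OffsetsInitializer.earliest()) // read the topic from the beginning
                .setValueOnlyDeserializer(new SimpleStringSchema())
                .build();

        // An unbounded stream of message values; event-time watermarks are not used here
        DataStream<String> text =
                env.fromSource(source, WatermarkStrategy.noWatermarks(), "Kafka Source");

        text.print();

        env.execute("Kafka Words");
    }
}
```

The resulting DataStream<String> can be fed into the same kind of transformations as a stream built from static elements, which is what makes it straightforward to move from a toy input to a live one.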

Flink’s flexibility in data ingestion is one of the aspects that make it a powerful tool for real-time stream data processing, as it can adapt to a wide variety of use cases and data sources in the business world.

This code takes a stream of text, splits it into individual words, counts how many times each word appears, and then prints the results to the console. It’s a basic example of real-time data processing with Apache Flink and is commonly used as an introduction to the Flink platform.

Step 4: Run Your Application

Build your project and execute the Flink job. You should see the word count results printed on the console.

This basic example demonstrates the fundamental structure of a Flink application, including setting up the execution environment, creating a data stream, applying transformations, and executing the job.

Feel free to expand on this example and explore more advanced Flink features as you become more familiar with the platform. Happy Flinking!

In conclusion, Apache Flink is a transformative force in the real-time and event-driven application landscape, enabling businesses to harness the power of data to make immediate, informed decisions and adapt swiftly to dynamic, data-driven markets. Its versatility, scalability, and fault tolerance make it a cornerstone in the ever-evolving world of real-time data processing. The future of business is no longer shaped by yesterday’s data but by the real-time insights gained from continuous streams of events.

References:

- https://flink.apache.org/

- Designing Data-Intensive Applications. M. Kleppmann. O’Reilly, Beijing (2017).